Statistically significant

A short fictional story about being an evil data scientist

Author’s note: Randy Au’s excellent post about being an evil data scientist recently inspired me to explore the concept through a short story. All details and names are fictionalized.

I’m watching our monthly town hall. I have the live stream full-screened on my monitor, but if I’m being honest, 40% of my focus is still on the incoming Slack messages popping up on my laptop screen.

The CEO just shared that they’re launching the new homepage re-design. There’s a slide with a picture of everyone who contributed to that project. My face is there.

We ran an A/B test of our new homepage re-design, and users who received that experience had 23% higher retention. That means for every 100 new users who come to the site, 23 who would’ve left before now continue to transact.

I stop composing my Slack DM… did she just interpret my 23% relative increase as an absolute percentage-point increase? I told them a million times: the control group had 10% Week 2 retention and the re-design group had 12.3% Week 2 retention, a 23% relative increase, not an absolute one. For every 100 new users, the re-design keeps about 2 more, not 23.
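The narrator's complaint comes down to simple arithmetic. Here is a minimal sketch using the Week 2 retention rates from the story (10% for the old home page, 12.3% for the re-design):

```python
# Week 2 retention rates quoted in the story
control_rate = 0.100   # old home page (A group)
redesign_rate = 0.123  # new home page (B group)

# Relative lift: how much larger the B rate is than the A rate
relative_lift = redesign_rate / control_rate - 1

# Absolute lift: the plain difference between the two rates
absolute_lift = redesign_rate - control_rate

# Extra retained users per 100 new users depends on the absolute lift only
extra_users_per_100 = absolute_lift * 100

print(f"Relative lift: {relative_lift:.0%}")
print(f"Absolute lift: {absolute_lift * 100:.1f} percentage points")
print(f"Extra retained users per 100 new users: {extra_users_per_100:.1f}")
```

The same pair of rates yields a 23% relative lift but only 2.3 percentage points of absolute lift, which is roughly 2 extra retained users per 100, not 23.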

The results were statistically significant.

I chuckle. I just spent the last two weeks trying to fake the results in a convincing way, and I forgot that leadership’s grasp on percentages is at the level of the sixth graders I used to tutor over the summers. They’re making this too easy.

I grew up in New Haven, so you could say I was destined to go to Yale. I majored in economics with a minor in psychology, though I spent most of my time watching Martin Scorsese movies and drinking Jack Daniel’s. I still got a 3.8 GPA.

My first job out of school was at a no-name management consulting firm. I’m not even going to say which firm because it’s embarrassing. McKinsey rejected me, and I’m still pissed about it. They hired Peter from my year instead, a champion debater, high school valedictorian, and total idiot.

Most of my fellow associates at the time thought management consulting was about Excel shortcuts and Keynote templates. I learned pretty quickly the best management consultants had just one motto: make the client look good.

Looking back, I guess this is when I really started to develop my career in data analytics. I learned, for example, that using secondary axes is a great way to make a trend look more hockey stick than it actually is.

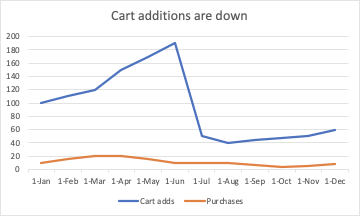

Conversion is also a really easy metric to fudge to get what you’re looking for. I had this one client ask us to analyze the effectiveness of their new checkout flow, which was abysmal. I think the overall number of people who added things to their cart actually went down, but no biggie. We just defined the metric of success to be conversion from cart add to purchase, which was, of course, much higher, because there were fewer cart adds overall.
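The trick is a denominator swap. In this minimal sketch the funnel counts are entirely hypothetical (the story gives none), chosen so that cart adds and purchases both fall after the new checkout flow launches:

```python
# Hypothetical funnel counts, made up for illustration (the story gives none)
before = {"visitors": 10_000, "cart_adds": 2_000, "purchases": 400}
after = {"visitors": 10_000, "cart_adds": 1_000, "purchases": 300}


def conversion_metrics(funnel: dict) -> dict:
    """Compute two candidate definitions of checkout 'conversion'."""
    return {
        # End-to-end view: what share of all visitors bought something
        "visitor_to_purchase": funnel["purchases"] / funnel["visitors"],
        # Narrow view: only users who already added to cart are counted
        "cart_to_purchase": funnel["purchases"] / funnel["cart_adds"],
    }


for label, funnel in (("before", before), ("after", after)):
    metrics = conversion_metrics(funnel)
    print(label, {name: f"{value:.1%}" for name, value in metrics.items()})
```

With these made-up numbers, visitor-to-purchase conversion falls from 4.0% to 3.0% while cart-to-purchase conversion rises from 20.0% to 30.0%. Fewer people reached the cart, so the narrower metric looks better even though fewer people bought anything.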

Every client I worked for was crushing it, according to my charts. Needless to say, every client wanted to hire me back. I was being fast-tracked for partner at my firm, but I was done kissing my manager’s ass. No, I knew there was a much faster way to get to a sufficiently senior position where my intelligence would actually be appreciated. I enrolled in the Data Science Institute at Columbia University. I was going to be a data scientist.

A year after we met at Columbia, Casey dumped me for a hip hop music producer named Rob she met at some music industry conference. When we were together, she told me not to worry about him. I even went out to drinks with the guy a few times. Huge tool.

I can confirm he’s a loser, too. After graduating from Columbia, I worked at a robovesting startup that was pretty popular among millennials. Sure enough, Rob had an account. Since I was a data scientist, I had direct access to nearly all customer data. Turns out Rob only had like $11k in assets with us.

Getting Rob’s Social Security number wasn’t that hard. We pulled credit reports for our customers as part of our services and stored their encrypted SSNs in our database. We had a few reports that contained decrypted SSNs (e.g., tax documents) that used a Python decryption script. I just had to run a query to get Rob’s encrypted data, assume production credentials locally, and run the decryption script.

I did this for Casey’s own good. Sooner or later she would have found out that Rob had no financial future. Even if I didn’t post his SSN anonymously on 4chan, it was probably just a matter of time before he got himself hacked. I pulled his account password because I was curious, and it was “Password1”. I wasn’t that surprised. I mean, the dude had a tattoo of a goldfish on his right bicep.

A year later, I was tapped for the company’s homepage re-design project. The project was personally sponsored by the CEO, largely inspired by her own personal gripes with the design. Finally, something more interesting than another data report for Citibank.

In the first debrief meeting, the CEO said this was her #1 priority, and she wasn’t kidding. They brought in a hotshot product manager from Twitter to run it, and his first action was to drop a million bucks right off the bat on a fancy design agency. Six months of dev time later, they said they would be very “data-driven” and that the rollout would be subject to the rigorous standards of A/B testing. We’d bucket 50% of new users into the old home page design (A group) and the other 50% into the new design (B group). Whichever group had a statistically significantly higher Week 2 retention would be the winner.

The intentions of the A/B test were noble, but I knew better. Given how much money and how many eyeballs were already invested, failure was not an option for this project. We just had to be careful about how we defined success.

Finessing the results of an A/B test was a bit trickier than anything I had to do at my previous consulting gig. Most of my colleagues had at least some experience with data, so many of my chart visualization tricks wouldn’t work on them.

Over lunch one day, one of my data scientist colleagues mentioned another A/B test they were about to launch. Our product had a free version that was accompanied by these eyesore banner ads advertising hair-loss products and vitamin subscription boxes. They were going to run an experiment removing these advertisements from the homepage entirely to understand the cost of ads on retention.

This gave me the opportunity I was looking for. If I could somehow force these two tests to collide and bucket a large portion of B users in my A/B test (the ones who get the new home page design) into the B group of my colleague’s test (the ones who get ads removed), I was pretty sure we’d see the retention uplift we were looking for.

My colleague informed me they were bucketing users using a very simple scheme. User IDs with an even last digit received ads and user IDs with an odd last digit didn’t receive ads. All I had to do was suggest the same exact bucketing scheme to my product team and we’d have pretty tight correlation with the ad experiment as long as we launched at roughly the same time. The ad product team and the home page product team were pretty far removed in the org, so I wasn’t super concerned they would talk to each other.
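To see why a shared bucketing rule is so damaging, here is a minimal Python sketch. The parity rule comes from the story; mapping odd IDs to the new design, the ID range, and the salted SHA-256 alternative are assumptions added for contrast. Salting a hash with the experiment name is one common way to keep concurrent experiments independent, not something the story's company is said to use.

```python
import hashlib


def parity_bucket(user_id: int) -> str:
    """Scheme from the story: even last digit -> control, odd -> treatment.

    For the ad test, treatment means ads removed; for the home page test we
    assume treatment means the new design (the B group).
    """
    # An even last digit is the same thing as an even ID.
    return "control" if user_id % 2 == 0 else "treatment"


def salted_hash_bucket(user_id: int, experiment: str) -> str:
    """Hypothetical alternative: hash the ID with a per-experiment salt."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    return "control" if digest[0] % 2 == 0 else "treatment"


def overlap(home_page_bucket, ads_bucket, user_ids) -> float:
    """Share of new-design (treatment) users who also had ads removed."""
    redesign_users = [u for u in user_ids if home_page_bucket(u) == "treatment"]
    also_no_ads = [u for u in redesign_users if ads_bucket(u) == "treatment"]
    return len(also_no_ads) / len(redesign_users)


user_ids = range(100_000, 110_000)  # hypothetical block of new-user IDs

shared_scheme = overlap(parity_bucket, parity_bucket, user_ids)
independent = overlap(
    lambda u: salted_hash_bucket(u, "home_page_redesign"),
    lambda u: salted_hash_bucket(u, "ad_removal"),
    user_ids,
)

print(f"Shared parity scheme: {shared_scheme:.0%} of re-design users had ads removed")
print(f"Independent salted hashes: {independent:.0%} of re-design users had ads removed")
```

With the shared scheme, every B user in the home page test also lands in the no-ads group, so the re-design's effect is completely confounded with the effect of removing ads. With independent salts the overlap drops to roughly half, which is what allows each experiment's effect to be estimated on its own.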

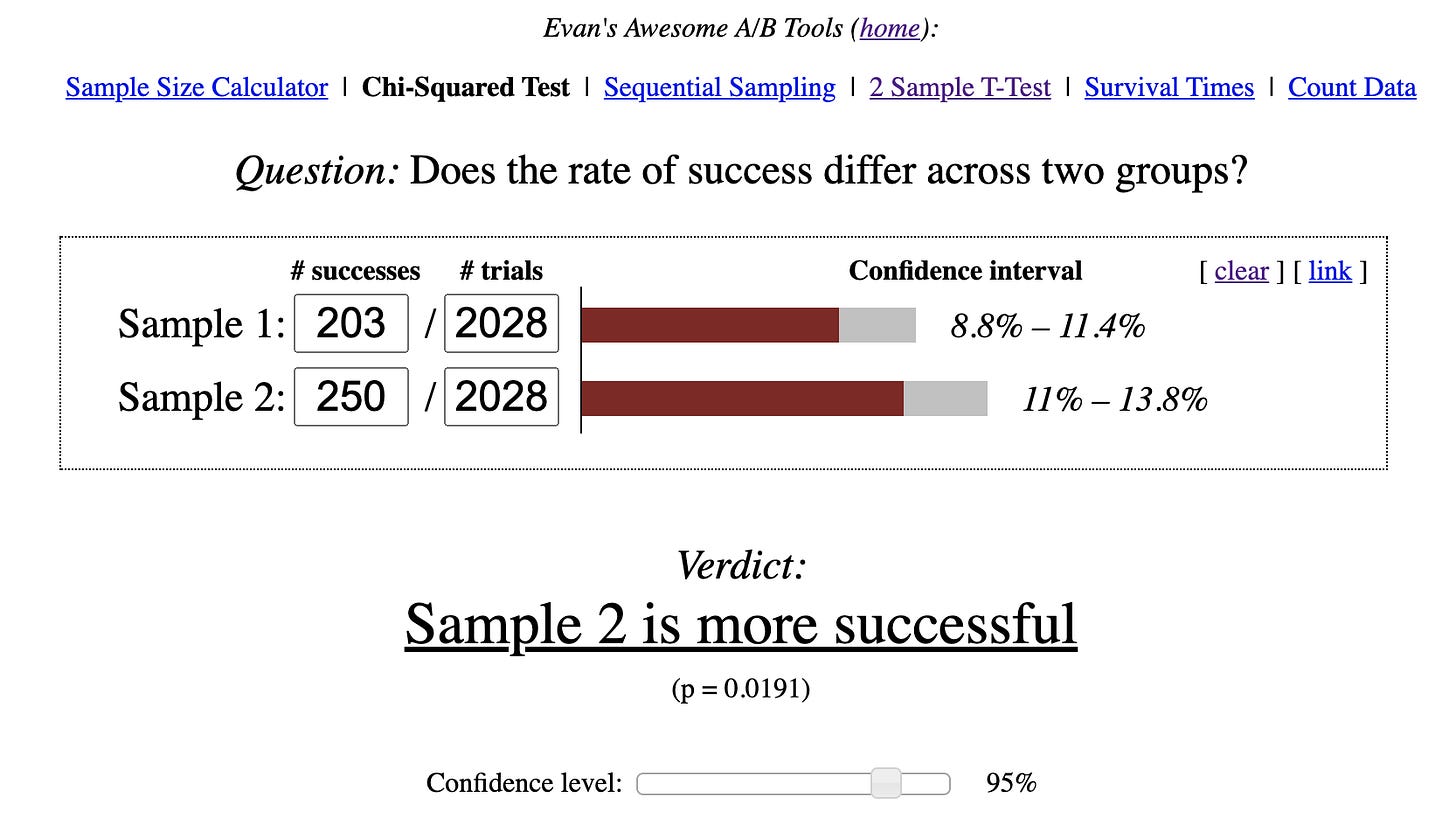

We launched the test, and after two weeks, the results were pretty clear. 4,056 users joined within the first week of the test. Of the 2,028 who saw the old home page design, 203 used the site again in the second week (10% retention). Of the 2,028 who saw the new home page design, 250 used the site again in the second week (12.3%). A simple chi-squared test confirmed the result was statistically significant (p < 0.05).
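The significance check can be reproduced with a hand-rolled Pearson chi-squared test on the 2x2 table of retained versus not-retained users. This is a minimal sketch, assuming 2,028 users per group (the group size at which 203 and 250 retained users match the stated 10% and 12.3% retention) and no Yates continuity correction. Under those assumptions it lands on the same p-value the narrator quotes in the interview later on.

```python
import math


def chi_squared_2x2(retained_a, total_a, retained_b, total_b):
    """Pearson chi-squared test (no continuity correction) on a 2x2 table.

    Rows are groups A and B; columns are retained vs. not retained.
    Returns (statistic, p_value) for 1 degree of freedom.
    """
    observed = [
        [retained_a, total_a - retained_a],
        [retained_b, total_b - retained_b],
    ]
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)

    # Sum (observed - expected)^2 / expected over all four cells
    statistic = 0.0
    for i, row in enumerate(observed):
        for j, count in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (count - expected) ** 2 / expected

    # With 1 degree of freedom, P(X > x) = erfc(sqrt(x / 2))
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Retained counts from the story; 2,028 users per group is an assumption
stat, p = chi_squared_2x2(203, 2028, 250, 2028)
print(f"chi-squared = {stat:.2f}, p = {p:.4f}")
```

This prints a statistic of about 5.49 and p ≈ 0.0191. With SciPy, `scipy.stats.chi2_contingency(observed, correction=False)` performs the same test; its default `correction=True` applies Yates' correction to 2x2 tables and returns a somewhat larger p-value.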

A week later, we rolled out to 100%. The PM and I got coffee later that month. He admitted he was surprised by the results; in his experience, most re-designs didn’t result in significant increases in retention. I told him it must have been a really good re-design. He smiled, thanked me for my help, and we left it there.

I’m interviewing at Google for a senior data science manager role. The robovesting startup got acquired by one of its larger banking partners. Good for my equity, but I know how slow review cycles can be at banks, and I’m not about to wait two years for my next promotion.

My interviewer looks like Obi-Wan Kenobi (the Alec Guinness one, not the Ewan McGregor one). He looks like he still uses the back of a stats textbook to look up critical values for normal distributions. I’m hit with fifteen minutes of stats brain teasers:

What is the difference between ridge regression and lasso?

What is the bias-variance tradeoff?

What are in-sample and out-of-sample error?

After I show Obi-Wan that I’m not a noob, he starts asking me about my projects.

Tell me about a recent project you worked on.

Well, I was a part of a large-scale home page re-design that drove a 23% increase in Week 2 retention.

Wow, that’s impressive. What kind of statistical test did you run?

Chi-squared.

And what was your p-value?

0.0191.

When can you start?
